Re-usage of BIND’s RBT DB

Overview

Patched BIND has an API for database back-ends. Bind-dyndb-ldap re-implements a big part of the API, but all functions required for DNSSEC support are missing and the overall functionality is limited.

BIND’s native database implementation is called RBTDB (Red-Black Tree Database). RBTDB implements the whole API and supports DNSSEC, IXFR, etc.

The plan is to drop most of the code from our database implementation and re-use RBTDB as much as possible.

Discussion:

- Initial idea (May 2013)

- SyncRepl, periodical re-synchronization, on-disk caching (June 2013)

Use Cases

- DNSSEC support will require significantly less code in bind-dyndb-ldap

We will get support for these features ‘for free’:

- Wildcards

- IXFR, which can be supported without any magic in the directory server (hopefully)

- Caching of non-existent records, i.e. the LDAP search is not repeated for each query

Design

For each LDAP DB maintained by bind-dyndb-ldap, create an internal RBTDB instance and hide it inside the LDAP DB instance, e.g.:

```diff
 typedef struct {
     dns_db_t            common;
     isc_refcount_t      refs;
     ldap_instance_t     *ldap_inst;
+    dns_db_t            *rbtdb;
 } ldapdb_t;
```

- The new instance will be empty, i.e. without any data. It has to be populated with records from LDAP.

Remove our implementation of all functions in ldap_driver.c and turn most of the functions into thin wrappers around RBTDB:

```diff
 static isc_result_t
 allrdatasets(dns_db_t *db, dns_dbnode_t *node, dns_dbversion_t *version,
              isc_stdtime_t now, dns_rdatasetiter_t **iteratorp)
 {
     ldapdb_t *ldapdb = (ldapdb_t *) db;
 
     REQUIRE(VALID_LDAPDB(ldapdb));
 
-    [our implementation]
+    return dns_db_allrdatasets(ldapdb->rbtdb, node, version, now, iteratorp);
 }
```

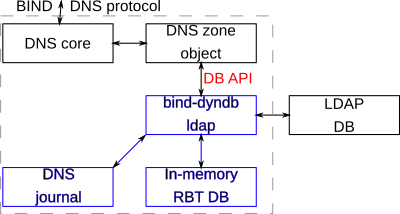

Block diagram follows. Blue parts are controlled by bind-dyndb-ldap:

The problem is how to dump data from LDAP into the internal/hidden RBTDB instance and how to keep it consistent when changes are made in LDAP. The problem has several parts:

Initial database synchronization

Fortunately, the 389 DS team decided to support RFC 4533 (so-called syncrepl, 389 DS ticket #47388). This will save us a lot of headaches caused by persistent search deficiencies.

The current plan is to use the refreshAndPersist mode from RFC 4533.

This allows us to store the syncCookie returned from the LDAP server and resume the synchronization process after a restart, reconnection, etc. As a result, we don’t need to dump the content of the whole database on each BIND restart.
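To illustrate the restart behaviour, here is a minimal sketch of persisting the syncCookie between runs. It assumes the cookie is treated as an opaque byte string that the plugin writes to a file after processing sync messages. The function names cookie_save() and cookie_load() and the file layout are hypothetical and are not part of bind-dyndb-ldap or of any LDAP client library. A real implementation would pass the loaded cookie to the library's sync request.

```c
#include <stdio.h>

/*
 * Hypothetical helpers that persist the opaque RFC 4533 syncCookie, so that
 * synchronization can resume after a restart instead of starting from
 * scratch. Names and file layout are illustrative only.
 */

/* Write the cookie to "<path>.tmp" first and then rename it over <path>,
 * so a crash never leaves a half-written cookie behind (rename() replaces
 * the target atomically on POSIX systems). Returns 0 on success. */
int cookie_save(const char *path, const unsigned char *cookie, size_t len)
{
	char tmp[4096];
	FILE *f;
	int n = snprintf(tmp, sizeof(tmp), "%s.tmp", path);

	if (n < 0 || (size_t)n >= sizeof(tmp))
		return -1;
	f = fopen(tmp, "wb");
	if (f == NULL)
		return -1;
	if (fwrite(cookie, 1, len, f) != len) {
		fclose(f);
		remove(tmp);
		return -1;
	}
	if (fclose(f) != 0) {
		remove(tmp);
		return -1;
	}
	return rename(tmp, path) == 0 ? 0 : -1;
}

/* Read the cookie into buf. Returns its length, or 0 when no usable cookie
 * exists (missing file, or cookie larger than buf). A return value of 0
 * means the sync request has to be sent without a cookie (full refresh). */
size_t cookie_load(const char *path, unsigned char *buf, size_t bufsize)
{
	FILE *f = fopen(path, "rb");
	size_t n;

	if (f == NULL)
		return 0;
	n = fread(buf, 1, bufsize, f);
	if (n == bufsize && fgetc(f) != EOF)
		n = 0;		/* cookie does not fit: treat it as missing */
	fclose(f);
	return n;
}
```

At startup, a zero return from cookie_load() would mean the plugin falls back to a full refresh. Otherwise only the changes since the stored cookie are requested.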

Syncrepl puts a new requirement on the LDAP client: bind-dyndb-ldap has to be able to map an entryUUID to the associated entry in RBTDB.

We can create an auxiliary RBTDB and store the entryUUID => DNS name mapping inside it. This RBTDB will be stored to and loaded from the filesystem like any other RBTDB.
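The lookup the auxiliary database has to answer can be sketched with a small in-memory hash table. This sketch uses a hash table with linear probing in place of the auxiliary RBTDB, so it shows only the put/get/delete semantics and none of the on-disk storage. The type struct uuidmap, the uuidmap_*() functions and the size limits are hypothetical.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

#define UUIDMAP_SIZE 1024	/* capacity, must be a power of two */
#define UUID_LEN 36		/* textual entryUUID: 8-4-4-4-12 hex digits */
#define DNSNAME_MAXLEN 255	/* enough for unescaped presentation names */

enum slot_state { SLOT_EMPTY = 0, SLOT_USED, SLOT_DELETED };

struct uuid_slot {
	enum slot_state state;
	char uuid[UUID_LEN + 1];
	char name[DNSNAME_MAXLEN + 1];
};

/* Zero-initialize before use (all slots SLOT_EMPTY). */
struct uuidmap {
	struct uuid_slot slots[UUIDMAP_SIZE];
};

/* FNV-1a hash of the UUID string, reduced to a slot index. */
static size_t uuid_hash(const char *uuid)
{
	uint32_t h = 2166136261u;

	for (; *uuid != '\0'; uuid++) {
		h ^= (unsigned char)*uuid;
		h *= 16777619u;
	}
	return h & (UUIDMAP_SIZE - 1);
}

/* Linear probing; stops at the first never-used slot. */
static struct uuid_slot *uuidmap_find(struct uuidmap *m, const char *uuid)
{
	size_t i = uuid_hash(uuid);

	for (size_t n = 0; n < UUIDMAP_SIZE; n++, i = (i + 1) & (UUIDMAP_SIZE - 1)) {
		struct uuid_slot *s = &m->slots[i];

		if (s->state == SLOT_EMPTY)
			return NULL;
		if (s->state == SLOT_USED && strcmp(s->uuid, uuid) == 0)
			return s;
	}
	return NULL;
}

/* Insert or update the DNS name mapped to uuid. Returns 0 on success. */
int uuidmap_put(struct uuidmap *m, const char *uuid, const char *name)
{
	struct uuid_slot *s;
	size_t i;

	if (strlen(uuid) > UUID_LEN || strlen(name) > DNSNAME_MAXLEN)
		return -1;
	s = uuidmap_find(m, uuid);
	if (s == NULL) {
		i = uuid_hash(uuid);
		for (size_t n = 0; n < UUIDMAP_SIZE && s == NULL; n++) {
			if (m->slots[i].state != SLOT_USED)
				s = &m->slots[i];	/* empty slot or tombstone */
			i = (i + 1) & (UUIDMAP_SIZE - 1);
		}
		if (s == NULL)
			return -1;	/* table full */
		s->state = SLOT_USED;
		strcpy(s->uuid, uuid);
	}
	strcpy(s->name, name);
	return 0;
}

/* Return the DNS name mapped to uuid, or NULL if the UUID is unknown. */
const char *uuidmap_get(struct uuidmap *m, const char *uuid)
{
	struct uuid_slot *s = uuidmap_find(m, uuid);

	return s != NULL ? s->name : NULL;
}

/* Forget uuid; a tombstone keeps other probe chains intact. */
void uuidmap_del(struct uuidmap *m, const char *uuid)
{
	struct uuid_slot *s = uuidmap_find(m, uuid);

	if (s != NULL)
		s->state = SLOT_DELETED;
}
```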

Entry renaming/moddn handling

| Ticket | Summary |
|---|---|
| #123 | LDAP MODRDN (rename) on records is not supported |

The SyncRepl protocol may represent a MODRDN operation as a modification of the ‘DN’ attribute while preserving the LDAP object’s UUID. The only way to find out the old name of a renamed entry is to store the LDAP UUID along with the entry.

An entry was renamed if a received change notification contains an entryUUID and a DN, but that entryUUID is already mapped to a DNS name which doesn’t match the name derived directly from the DN.

In that case, the old name will be deleted from RBTDB completely and the new name will be filled with the data from the entry.

This feature depends on ticket #151. The following information needs to be stored inside MetaDB:

- LDAP UUID -> (DNS zone name, DNS FQDN) mapping

The condition for LDAP MODRDN detection is:

```
if (LDAP UUID is in MetaDB &&
    (dn_to_dnsname(LDAP DN) != DNS names in MetaDB))
{
    LDAP MODRDN detected:
    delete old DNS names
    create new DNS names
}
else
{
    ordinary LDAP ADD/MOD/DEL detected
}
```
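The detection condition can also be written as a small C function. This is a sketch under several assumptions. dn_to_dnsname() itself is not shown. The MetaDB lookup is reduced to a pointer that is NULL when the UUID is unknown. DNS names are compared ASCII case-insensitively as presentation-format strings. The names struct dns_names, classify_change() and dnsname_equal() are hypothetical.

```c
#include <ctype.h>
#include <stddef.h>

/* DNS names recorded in MetaDB for one LDAP UUID (or derived from a DN). */
struct dns_names {
	const char *zone;	/* e.g. "example.com." */
	const char *fqdn;	/* e.g. "www.example.com." */
};

enum change_kind { CHANGE_ORDINARY, CHANGE_MODRDN };

/* DNS names compare case-insensitively (ASCII only in this sketch). */
static int dnsname_equal(const char *a, const char *b)
{
	for (; *a != '\0' && *b != '\0'; a++, b++)
		if (tolower((unsigned char)*a) != tolower((unsigned char)*b))
			return 0;
	return *a == *b;	/* equal only if both strings ended */
}

/*
 * stored:  names recorded in MetaDB for the entryUUID, or NULL when the
 *          UUID is not in MetaDB yet
 * from_dn: names derived from the entry's current DN (dn_to_dnsname())
 */
enum change_kind classify_change(const struct dns_names *stored,
				 const struct dns_names *from_dn)
{
	if (stored != NULL &&
	    (!dnsname_equal(stored->zone, from_dn->zone) ||
	     !dnsname_equal(stored->fqdn, from_dn->fqdn)))
		return CHANGE_MODRDN;	/* delete old names, create new ones */
	return CHANGE_ORDINARY;		/* ordinary ADD/MOD/DEL */
}
```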

Run-time changes made directly at LDAP level

The content of a changed LDAP entry is received by the plugin via syncrepl. The plugin has to synchronize the records in RBTDB with the received entry.
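One way to synchronize a node is to compare its current records with the records in the received entry. Records that exist only in RBTDB are deleted, records that exist only in LDAP are added, and unchanged records are left alone. This minimal sketch assumes each record is represented as a "TYPE rdata" text string and compares these strings exactly. A real implementation would compare rdata in BIND's own representation and would also have to handle TTL changes. diff_records() is a hypothetical name.

```c
#include <stddef.h>
#include <string.h>

/* Records are "TYPE rdata" strings in this sketch, e.g. "A 192.0.2.1". */
static int contains(const char *const *set, size_t n, const char *rec)
{
	for (size_t i = 0; i < n; i++)
		if (strcmp(set[i], rec) == 0)
			return 1;
	return 0;
}

/*
 * Compare records currently in RBTDB with records in the entry received
 * via syncrepl. Records only in RBTDB go to del[], records only in LDAP
 * go to add[]. del[] must hold n_rbt entries and add[] n_ldap entries.
 */
void diff_records(const char *const *rbt, size_t n_rbt,
		  const char *const *ldap, size_t n_ldap,
		  const char **del, size_t *n_del,
		  const char **add, size_t *n_add)
{
	*n_del = 0;
	*n_add = 0;
	for (size_t i = 0; i < n_rbt; i++)
		if (!contains(ldap, n_ldap, rbt[i]))
			del[(*n_del)++] = rbt[i];
	for (size_t i = 0; i < n_ldap; i++)
		if (!contains(rbt, n_rbt, ldap[i]))
			add[(*n_add)++] = ldap[i];
}
```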

DNS dynamic updates

We can intercept calls to dns_db_addrdataset() and dns_db_deleterdataset(), modify the LDAP DB first and then modify the RBT DB. The entry change notification (ECN) from LDAP will be propagated back to BIND via persistent search and then applied again (usually with no effect).
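The order "LDAP first, then RBTDB" can be sketched as a wrapper. In this sketch the two back-ends are function pointers so they can be stubbed out. The type result_t and the functions update_add(), ldap_add and rbtdb_add are hypothetical and do not reproduce BIND's dns_db_addrdataset() signature. The sketch makes one assumption about the design: the update is rejected and RBTDB is left untouched if the LDAP write fails.

```c
/* Result codes for this sketch only (not isc_result_t). */
typedef enum { R_SUCCESS = 0, R_FAILURE = 1 } result_t;

/* Hypothetical back-ends, modeled as function pointers. */
struct backends {
	result_t (*ldap_add)(const char *owner, const char *rdata);
	result_t (*rbtdb_add)(const char *owner, const char *rdata);
};

/*
 * Called instead of adding the rdataset to RBTDB directly. LDAP is written
 * first because it holds the authoritative data; RBTDB is written only
 * after LDAP accepted the change. The ECN that arrives later carries the
 * same data and is applied again, usually with no effect.
 */
result_t update_add(const struct backends *be, const char *owner,
		    const char *rdata)
{
	result_t r = be->ldap_add(owner, rdata);

	if (r != R_SUCCESS)
		return r;	/* reject the update, RBTDB untouched */
	return be->rbtdb_add(owner, rdata);
}
```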

Race conditions

There is a potential for race conditions, e.g. when BIND makes multiple successive changes to a single entry (i.e. DNS name):

- First change from BIND written to LDAP and RBTDB

- Second change from BIND written to LDAP and RBTDB

- The first ECN is received from LDAP by BIND: RBTDB is synchronized to the state denoted by the ECN, so the second change is discarded.

- The second ECN is received and consistency is restored.

The other option is not to write directly to RBTDB, but this approach has another problem:

- The LDAP DB is updated by BIND, but the RBT DB is not updated at the same time.

- Queries between the moment of the update and the ECN from LDAP will return old results.

- BIND receives ECN from LDAP.

- The change is applied to RBT DB.

- Clients can see new data from this moment.
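Both timelines can be replayed with a toy model to show what a query returns after each step. In this sketch the whole content of one DNS name is a single integer, where 0 is the state before any update. It also assumes that every ECN carries the state of the entry at the time of the change it reports. The names struct event and replay() are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* Toy model of one DNS name: its whole content is one integer. */
enum ev_type { EV_BIND_WRITE, EV_ECN };

struct event {
	enum ev_type type;
	int value;	/* new content (write) or content carried by the ECN */
};

/*
 * Replays events in order and stores in trace[i] what a DNS query would
 * return after event i. write_through selects the first option (BIND
 * writes to LDAP and RBTDB) or the second one (BIND writes to LDAP only
 * and RBTDB changes when the ECN arrives). Returns the final LDAP content.
 */
int replay(const struct event *ev, size_t n, bool write_through, int *trace)
{
	int ldap = 0, rbtdb = 0;

	for (size_t i = 0; i < n; i++) {
		if (ev[i].type == EV_BIND_WRITE) {
			ldap = ev[i].value;	/* LDAP is always written */
			if (write_through)
				rbtdb = ev[i].value;
		} else {
			rbtdb = ev[i].value;	/* ECN overwrites RBTDB */
		}
		trace[i] = rbtdb;
	}
	return ldap;
}
```

With the write-through option and events "write 1, write 2, ECN 1, ECN 2", queries see 1, 2, 1, 2: the second change briefly disappears. With the LDAP-only option and events "write 1, ECN 1", queries see 0 and then 1: old data is served until the ECN arrives.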

Update filtering based on the modifiersName attribute is not feasible, because modifiersName is not updated on delete.

Re-synchronization

| Ticket | Summary |
|---|---|
| #125 | Re-synchronization is not implemented |

During the initial discussion we decided to implement periodic LDAP->RBTDB re-synchronization. It should ensure that all discrepancies between LDAP and RBTDB are eventually resolved.

We likely need the re-synchronization mechanism itself even if it is not run periodically, because a reconnection to LDAP can require re-synchronization if the SyncRepl Content Update fails with the e-syncRefreshRequired error.
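The reconnection case can be summarized as a small decision function. RFC 4533 defines e-syncRefreshRequired as LDAP resultCode 4096. This sketch assumes that a missing cookie, or that result code returned for a request carrying the stored cookie, leads to a full refresh followed by re-synchronization, because the deletions that happened in the meantime are not reported. The names after_reconnect() and enum sync_action are hypothetical.

```c
#include <stddef.h>

/* resultCode defined by RFC 4533 (e-syncRefreshRequired). */
#define E_SYNC_REFRESH_REQUIRED 4096

enum sync_action {
	SYNC_RESUME,		/* send stored cookie, receive only changes */
	SYNC_FULL_REFRESH,	/* no usable cookie: full content + re-sync */
};

/*
 * cookie_len:     length of the stored syncCookie (0 = no cookie)
 * refresh_result: resultCode of the sync request sent with that cookie
 *                 (0 = success or not attempted yet)
 */
enum sync_action after_reconnect(size_t cookie_len, int refresh_result)
{
	if (cookie_len == 0 || refresh_result == E_SYNC_REFRESH_REQUIRED)
		return SYNC_FULL_REFRESH;
	return SYNC_RESUME;
}
```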

Principle

- Maintain a monotonic generation number. It can be the number of reconnections to the LDAP server counted from plugin start.

- Use Design/MetaDB to store the generation number of every DNS object.

- Do a full LDAP search of the cn=dns sub-tree for all objects in the DNS tree.

- When an object from LDAP is processed, set its generation number in MetaDB to the current generation number.

- Overwrite existing data in the DNS database with data from LDAP.

- When all results of the full LDAP search are processed, iterate over the whole MetaDB and delete objects whose generation number is lower than the current generation number.

- Note: Be careful with cases where one object with UUID1 and name name.example. was deleted but another object with UUID2 and name name.example. was added. This has to be detected to prevent deletion of the DNS object corresponding to UUID2.
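The principle is a mark-and-sweep pass, which the following C sketch illustrates. It assumes MetaDB can be modeled as a plain array of (UUID, DNS name, generation) records. The LDAP search and the RBTDB writes are left out, and the sweep only reports which DNS names should be deleted. To cover the UUID1/UUID2 case from the note, a stale object's name is kept when an object seen in the current generation still owns it. The names struct metadb, resync_mark() and resync_sweep() are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define METADB_MAX 64	/* fixed capacity for this sketch */

struct meta_obj {
	const char *uuid;	/* strings are borrowed, owned by the caller */
	const char *name;	/* DNS name owned by the LDAP object */
	unsigned long gen;	/* generation in which it was last seen */
	bool live;
};

struct metadb {
	struct meta_obj obj[METADB_MAX];
	size_t n;
};

/* Mark: an object returned by the full search gets the current generation
 * (its DNS data would be overwritten from LDAP at this point). */
int resync_mark(struct metadb *db, const char *uuid, const char *name,
		unsigned long cur_gen)
{
	for (size_t i = 0; i < db->n; i++) {
		struct meta_obj *o = &db->obj[i];

		if (o->live && strcmp(o->uuid, uuid) == 0) {
			o->name = name;
			o->gen = cur_gen;
			return 0;
		}
	}
	if (db->n == METADB_MAX)
		return -1;
	db->obj[db->n++] = (struct meta_obj){ uuid, name, cur_gen, true };
	return 0;
}

static bool name_owned_by_current(const struct metadb *db, const char *name,
				  unsigned long cur_gen)
{
	for (size_t i = 0; i < db->n; i++)
		if (db->obj[i].live && db->obj[i].gen == cur_gen &&
		    strcmp(db->obj[i].name, name) == 0)
			return true;
	return false;
}

/* Sweep: drop objects with gen < cur_gen. Their DNS names are reported in
 * deleted[] (capacity db->n) unless a current object owns the same name.
 * Returns the number of names to delete from RBTDB. */
size_t resync_sweep(struct metadb *db, unsigned long cur_gen,
		    const char **deleted)
{
	size_t nd = 0;

	for (size_t i = 0; i < db->n; i++) {
		struct meta_obj *o = &db->obj[i];

		if (!o->live || o->gen >= cur_gen)
			continue;
		o->live = false;
		if (!name_owned_by_current(db, o->name, cur_gen))
			deleted[nd++] = o->name;
	}
	return nd;
}
```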

Questions

- What about dynamic updates during re-synchronization?

How to determine the re-synchronization interval?

Provide resync_interval_min and resync_interval_max configuration options. Start with some initial value (= minimal?) and double the interval if no discrepancies were found. Divide the interval by 2 in case of any error. The new value has to stay within the interval [resync_interval_min, resync_interval_max].
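The proposed adaptation rule can be written as one function. The proposal does not define "error" precisely. This sketch assumes that finding any discrepancy counts as the error case. It also assumes intervals are given in seconds. next_resync_interval() is a hypothetical name, and its min and max parameters stand for resync_interval_min and resync_interval_max.

```c
/*
 * Next re-synchronization interval (seconds, assumed unit).
 * Doubles the interval when the last run found no discrepancy,
 * halves it otherwise (assumption: a discrepancy is the "error" case),
 * and clamps the result to [min, max].
 */
unsigned int next_resync_interval(unsigned int cur, unsigned int discrepancies,
				  unsigned int min, unsigned int max)
{
	unsigned int next;

	if (discrepancies == 0)
		next = (cur > max / 2) ? max : cur * 2;	/* avoid overflow */
	else
		next = cur / 2;
	if (next < min)
		next = min;
	if (next > max)
		next = max;
	return next;
}
```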

- Question: Is it a good idea?

Implementation

The initial implementation has some limitations:

- #123: LDAP MODRDN (rename) on records is not supported

- #124: Startup with big amount of data in LDAP is slow

- #125: Re-synchronization is not implemented

- #126: Support per-server _location records for FreeIPA sites

- #127: Zones enabled at run-time are not loaded properly

- #128: Records deleted when connection to LDAP is down are not refreshed properly

- #134: Child DNS zone is corrupted if parent zone is hosted on the same server

Feature Management

This feature doesn’t require special management. The options directory, resync_interval_min and resync_interval_max are provided for special cases. The default values should work for all users.

Major configuration options and enablement

New options in /etc/named.conf:

- directory specifies the filesystem path where cached zones are stored.

- resync_interval_min and resync_interval_max control periodic re-synchronization as described above.

The existing SOA expire field in each zone specifies the longest time interval during which data from the cache can be served to clients even if the connection to LDAP is down.
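The SOA expire rule can be expressed as a single check. This sketch assumes the interval is measured from the last moment the plugin was known to be in sync with LDAP. The text does not state the reference point, so that choice is an assumption, as is the name cache_servable().

```c
#include <stdbool.h>
#include <stdint.h>
#include <time.h>

/*
 * May cached zone data still be served to clients?
 * last_sync:  time of the last successful synchronization with LDAP
 *             (assumed reference point)
 * soa_expire: EXPIRE field of the zone's SOA record, in seconds
 */
bool cache_servable(bool ldap_connected, time_t now, time_t last_sync,
		    uint32_t soa_expire)
{
	if (ldap_connected)
		return true;	/* data is kept in sync via syncrepl */
	return difftime(now, last_sync) < (double)soa_expire;
}
```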

Replication

No impact on replication.

Updates and Upgrades

No impact on updates and upgrades.

Dependencies

This feature depends on 389 Directory Server with support for RFC 4533 (so-called syncrepl). See 389 DS ticket #47388.

External Impact

No impact on other development teams and components.

Backup and Restore

The path specified by the directory option has to exist and be writable by named. It is not necessary to back up the content of the cache.

Test Plan

Test scenarios that will be transformed into test cases for FreeIPA Continuous Integration during the implementation or review phase.

RFE Author

Petr Spacek <pspacek@…